- #Install spark on windows anaconda install#

- #Install spark on windows anaconda zip file#

- #Install spark on windows anaconda update#

- #Install spark on windows anaconda download#

- #Install spark on windows anaconda free#

PySpark simply connects (by socket) to the JVM using Py4J (Python-Java interoperation). Java is a must for Spark and for many other transitive dependencies (the Scala compiler is just a library for the JVM). You will need to use a compatible Scala version (2.10.x). Before you start installing Scala on your machine, you must have Java 1.8 or greater installed on your computer. Scala can be installed on any UNIX-flavored or Windows-based system.
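As a rough illustration of the Java 1.8 requirement, the Python sketch below parses the banner printed by `java -version` and checks that it reports 1.8 or newer. The function names are hypothetical helpers (they are not part of Spark or Py4J), and the regular expression assumes the usual `version "..."` banner format.

```python
import re
import subprocess


def parse_java_version(version_output):
    """Extract (major, minor) from the quoted version in `java -version` output.

    Legacy releases report e.g. "1.8.0_292"; newer ones report "11.0.2" or "17".
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no version string found in: " + repr(version_output))
    return int(match.group(1)), int(match.group(2) or 0)


def meets_java_8(version_output):
    """Return True when the reported version is Java 1.8 or newer."""
    major, minor = parse_java_version(version_output)
    # In the legacy "1.x" scheme the real release number is the minor part.
    return minor >= 8 if major == 1 else major >= 9


def installed_java_banner():
    """Run `java -version`; the JVM writes its banner to stderr, not stdout."""
    result = subprocess.run(
        ["java", "-version"], capture_output=True, text=True, check=True
    )
    return result.stderr or result.stdout
```

On a machine with Java on the PATH, `meets_java_8(installed_java_banner())` gives a quick yes/no answer before Scala and Spark are installed.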


Download Scala binaries from / download/.


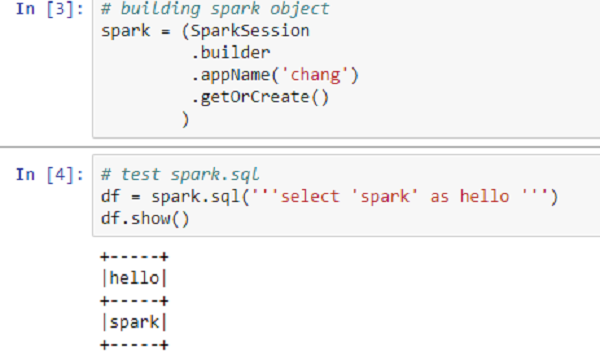

How do I download and install Scala on Windows? Verify the JDK installation on your Windows machine from the command prompt first.

Type and enter `pyspark` on the terminal to open up the PySpark interactive shell. Head to your workspace directory and spin up the Jupyter notebook from there. Open Jupyter in a browser using the public DNS of the EC2 instance. Import the PySpark module to verify that everything is working properly.
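One way to carry out this import check from a notebook cell is sketched below. The helper names and the application name `install-check` are hypothetical, and the sketch assumes a local single-threaded master is acceptable for a quick test.

```python
import importlib.util


def pyspark_available():
    """Return True if the `pyspark` package can be found on the import path."""
    return importlib.util.find_spec("pyspark") is not None


def smoke_test():
    """Start a local SparkSession, count a three-row DataFrame, then stop Spark."""
    # Imported lazily so this code still loads where PySpark is missing.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[1]")        # one local worker thread, no cluster needed
        .appName("install-check")  # hypothetical application name
        .getOrCreate()
    )
    try:
        return spark.createDataFrame([(1,), (2,), (3,)], ["n"]).count()
    finally:
        spark.stop()
```

If `pyspark_available()` returns `False`, the package is not visible to the active conda environment. Otherwise `smoke_test()` should return 3, which exercises the Python, Py4J, and JVM pieces together.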

The Spark environment is ready and you can now use Spark in a Jupyter notebook. Make sure the PATH variable is set correctly according to where you installed your applications. If your overall PATH looks like what is shown below, then we are good to go: `home/ubuntu/spark-2.4.3-bin-hadoop2.7/bin:/home/ubuntu/anaconda3/condabin:/bin:/usr/bin:/home/ubuntu/anaconda3/bin/`
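The PATH check can also be done programmatically. The sketch below is a minimal, hypothetical helper that reports which required directories are missing from a colon-separated PATH string. The directory list assumes the install locations used in this article (Spark under `/home/ubuntu/spark-2.4.3-bin-hadoop2.7` and Anaconda under `/home/ubuntu/anaconda3`), so adjust it to your own layout.

```python
import os

# Assumed install locations, taken from the paths used in this article.
REQUIRED_DIRS = [
    "/home/ubuntu/spark-2.4.3-bin-hadoop2.7/bin",
    "/home/ubuntu/anaconda3/bin",
]


def missing_path_entries(path_value, required_dirs, separator=":"):
    """Return the required directories that do not appear in a PATH-style string."""
    # Strip trailing slashes so "/a/bin/" and "/a/bin" compare equal.
    entries = {e.rstrip("/") for e in path_value.split(separator) if e}
    return [d for d in required_dirs if d.rstrip("/") not in entries]


def check_current_path():
    """Check the PATH of the current process against REQUIRED_DIRS."""
    return missing_path_entries(os.environ.get("PATH", ""), REQUIRED_DIRS)
```

An empty list from `check_current_path()` means both directories are present. Anything else names the entries still to be added.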


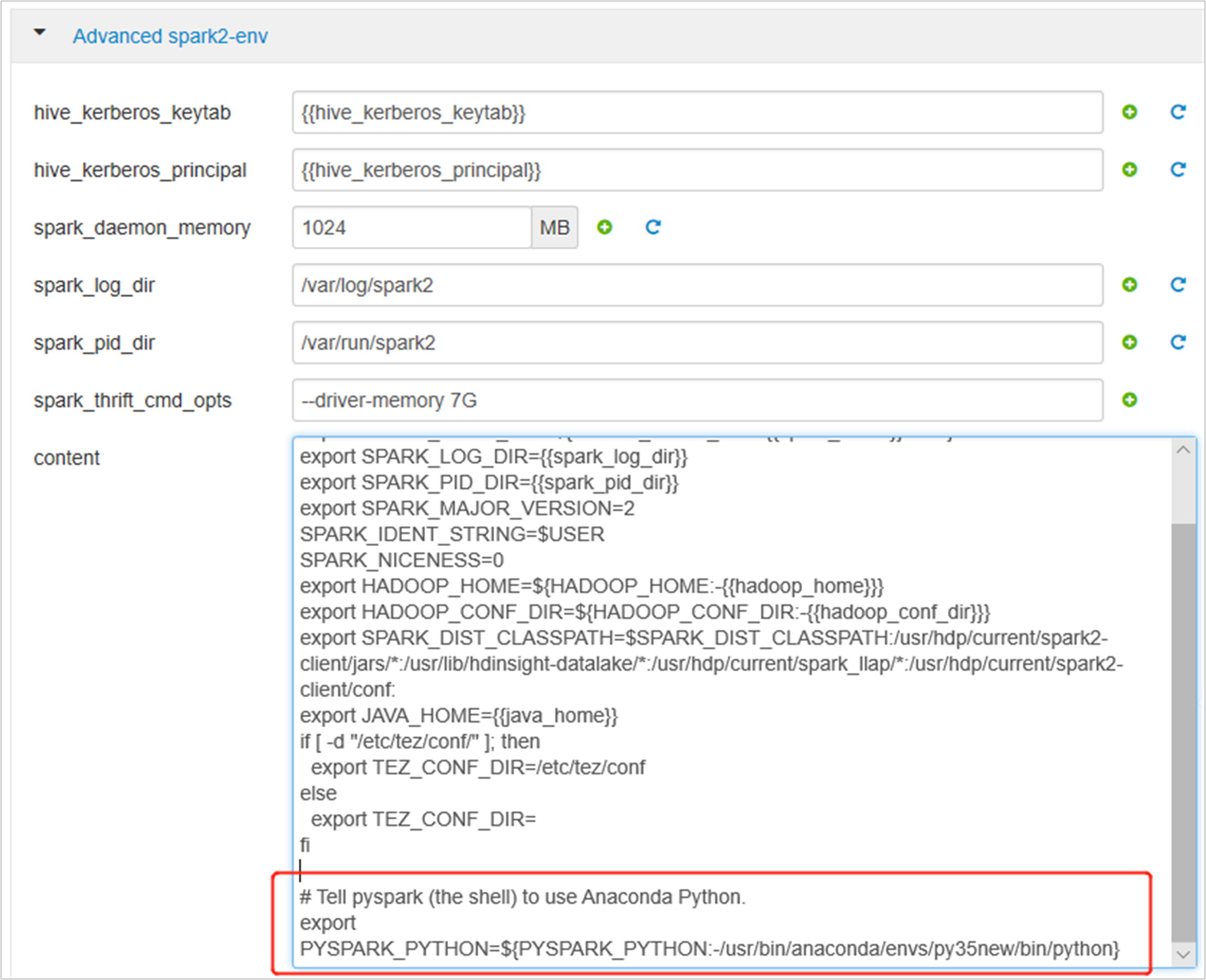

To install py4j, make sure you are in the Anaconda environment. You will see '(base)' before your instance name if you are in the Anaconda environment. If not, type and enter `conda activate`. To exit from the Anaconda environment, type `conda deactivate`. Once you are in conda, type `pip install py4j` to install py4j.

The steps to install PySpark in Anaconda and Jupyter notebook include downloading and installing the Anaconda distribution and validating the PySpark installation from the pyspark shell.

Head to the downloads page of Apache Spark, choose a specific version, and hit download, which will then take you to a page with the mirror links. Copy one of the mirror links and use it to download the Spark .tgz file onto your EC2 instance.

Extract the downloaded tgz file using the following command and move the decompressed folder to the home directory:

`sudo tar -zxvf spark-2.4.3-bin-hadoop2.7.tgz`

`mv spark-2.4.3-bin-hadoop2.7 /home/ubuntu/`

Set the SPARK_HOME environment variable to the Spark installation directory and update the PATH environment variable by executing the following commands:

`export SPARK_HOME=/home/ubuntu/spark-2.4.3-bin-hadoop2.7`

`export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH`
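For readers who prefer to set these variables from Python (for example before launching a subprocess), the sketch below builds the same environment in a dictionary. The function `spark_environment` is a hypothetical helper, the colon separator assumes a Linux host such as the Ubuntu instance used here, and adding `$SPARK_HOME/bin` to PATH is an assumption based on the PATH shown in this article.

```python
import os


def _prepend(entry, existing, separator=":"):
    """Put `entry` in front of an existing PATH-style value, if there is one."""
    return entry + separator + existing if existing else entry


def spark_environment(spark_home, base_env=None):
    """Return a copy of the environment with SPARK_HOME, PATH and PYTHONPATH set."""
    env = dict(os.environ if base_env is None else base_env)
    env["SPARK_HOME"] = spark_home
    # Assumption: Spark's launcher scripts live in $SPARK_HOME/bin.
    env["PATH"] = _prepend(spark_home + "/bin", env.get("PATH", ""))
    # Mirrors: export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH
    env["PYTHONPATH"] = _prepend(spark_home + "/python", env.get("PYTHONPATH", ""))
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run`, which leaves the environment of the current process untouched.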

Install Scala from the terminal as well. We also need to install the py4j library, which enables Python programs running in a Python interpreter to dynamically access Java objects in a Java Virtual Machine.

Make sure to perform all the steps in the AWS setup article, including the setting up of Jupyter Notebook, as we will need it to use Spark. Once you are done with that article, follow along here.

To install Spark we have two dependencies to take care of. Let's install both onto our AWS instance. Connect to AWS with SSH and follow the steps below to install Java and Scala.

To connect to the EC2 instance, type in and enter `ssh -i "security_key.pem"` followed by the user and public address of your instance. Be sure to put your security key and your public IP correctly.

On the EC2 instance, update the packages from the terminal. After installing Java, verify the installation by typing `java -version`; the output should report the installed Java version.


The first thing we need is an AWS EC2 instance. We have already covered this part in detail in another article, "Setting Up A Completely Free Jupyter Server For Data Science With AWS". Follow that article to set up a full-fledged data science machine with AWS.

Apache Spark is a framework built around the idea of cluster computing. It allows data parallelism with strong fault tolerance to prevent data loss. It has high-level APIs for programming languages like Python, R, Java and Scala. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. In this article, we will learn to set up an Apache Spark environment on Amazon Web Services.
